Run Application#

For the complete Intel® ESDQ CLI reference, refer to the Intel® ESDQ CLI Overview. To list the available Metro AI Suite tests, run the following commands:

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-h"

You can run an individual test or all tests together. The results from each test are collated in the HTML report.

Run Full ESDQ#

Run the following commands to execute all the Metro AI Suite tests and generate the full report.

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-r all"

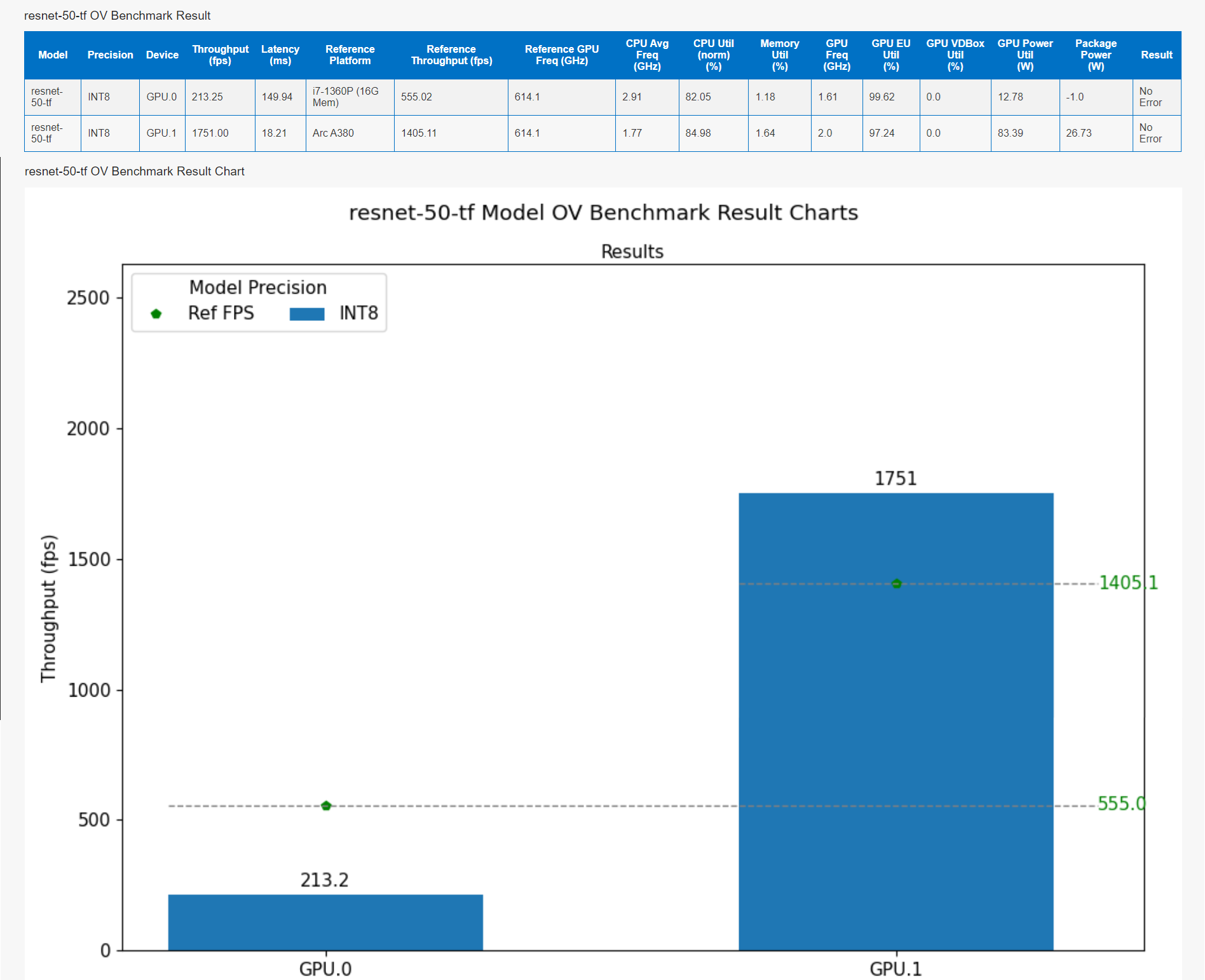

Run OpenVINO Benchmark#

The OpenVINO benchmark measures the performance of commonly used neural network models on the platform. The following models are supported:

resnet-50-tf

ssdlite-mobilenet-v2

efficientnet-b0

yolo-v5s

yolo-v8s

mobilenet_v2

clip-vit-base-patch16

Note: yolo-v8s is skipped for the NPU device on Intel® Core™ Ultra platforms because it cannot currently run on the NPU.

The following OVRunner commands benchmark all the models on the dGPU for 180 seconds.

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-r OVRunner -d GPU.0 -t 180"

OVRunner parameters:

-d {DEVICE: CPU NPU GPU.0 MULTI:CPU,NPU,GPU.0} specifies the device type.

-t <seconds> specifies the benchmark duration in seconds.

-m {resnet-50-tf, ssdlite_mobilenet_v2, efficientnet-b0, yolo-v5s, yolo-v8s, mobilenet-v2-pytorch, clip-vit-base-patch16} specifies the model. Do not include this parameter to run all models.

-p {INT8, FP16} specifies the model precision.
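As a minimal sketch of how the OVRunner parameters above combine, the following shell helper assembles the --arg string from a device, a duration, and an optional model and precision. The function names ovrunner_args and run_ovrunner are hypothetical helpers introduced for illustration and are not part of ESDQ. The sketch also assumes that the documented flags can be combined freely in a single invocation.

```shell
# Hypothetical helper: build the OVRunner argument string from the documented flags.
# Usage: ovrunner_args DEVICE SECONDS [MODEL] [PRECISION]
ovrunner_args() {
    args="-r OVRunner -d $1 -t $2"
    # -m is optional; leaving it out benchmarks all models.
    if [ -n "${3:-}" ]; then
        args="$args -m $3"
    fi
    # -p is optional; INT8 and FP16 are the documented values.
    if [ -n "${4:-}" ]; then
        args="$args -p $4"
    fi
    printf '%s\n' "$args"
}

# Hypothetical wrapper: pass the assembled string to esdq.
# Run it from the package directory used in the commands above.
run_ovrunner() {
    esdq --verbose module run metro-device-qualification --arg "$(ovrunner_args "$@")"
}

# Example: benchmark only resnet-50-tf at INT8 on the CPU for 60 seconds.
# run_ovrunner CPU 60 resnet-50-tf INT8
```

Keeping the string assembly separate from the esdq call makes it possible to print and inspect the arguments before starting a long benchmark.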

The following is an example of a resnet-50-tf benchmark result:

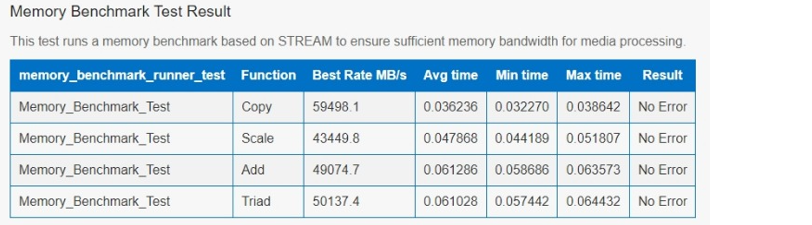

Run Memory Benchmark#

The memory benchmark measures the sustained memory bandwidth based on STREAM. To measure memory bandwidth for media processing, invoke the MemBenchmark runner:

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-r MemBenchmark"

After the MemBenchmark runner completes, the location of the test report is displayed. The following is an example report:

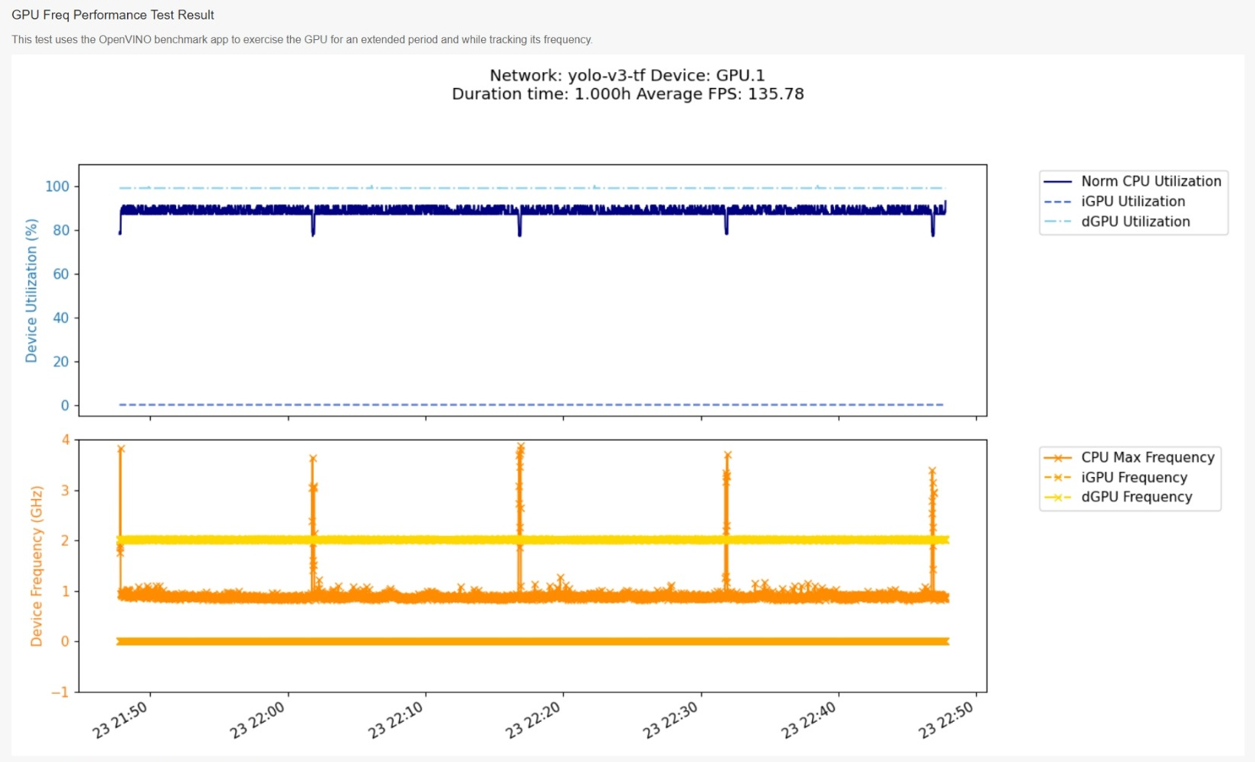

Run AI Frequency Measurement#

The AI inference frequency benchmark is designed to stress the GPU for an extended period. The benchmark records the GPU frequency while it runs an inference workload using the OpenVINO™ Benchmark Tool.

The following FreqRunner commands measure the GPU frequency for 1 hour.

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-r FreqRunner -t 1"

FreqRunner parameters:

-t <hrs> specifies the benchmark duration in hours.
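Note that -t is given in hours for FreqRunner, whereas OVRunner takes seconds. The sketch below guards against mixing up the units by rejecting anything other than a positive whole number of hours before building the argument string. The helper name freqrunner_args is hypothetical, and the whole-hours restriction is an assumption of this sketch. The documentation does not state whether fractional hours are accepted.

```shell
# Hypothetical helper: build the FreqRunner argument string.
# Usage: freqrunner_args HOURS
freqrunner_args() {
    case "${1:-}" in
        # Reject empty input, anything containing a non-digit, and leading zeros.
        ''|*[!0-9]*|0*)
            echo "duration must be a positive whole number of hours" >&2
            return 1
            ;;
    esac
    printf '%s\n' "-r FreqRunner -t $1"
}

# Example: measure GPU frequency for 2 hours (run from the package directory).
# esdq --verbose module run metro-device-qualification --arg "$(freqrunner_args 2)"
```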

The following is an example plot:

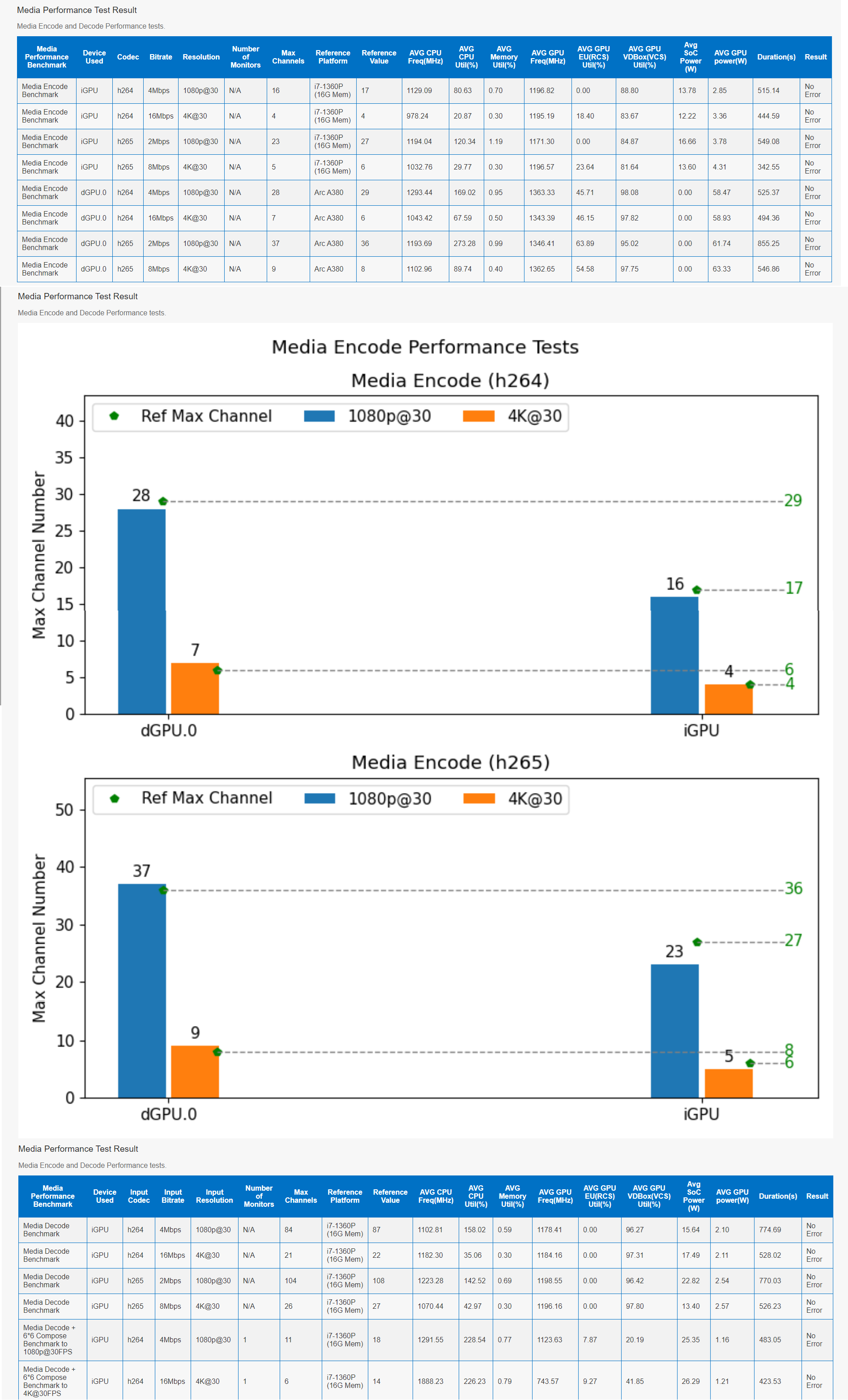

Run Media Performance Benchmark#

The following MediaRunner commands run the media performance benchmark for all video codecs (H264, H265) and resolutions (1080p, 4K) on all available devices (iGPU on Intel® Core™ and Intel® Core™ Ultra platforms, dGPU, and CPU on Intel® Xeon® platforms).

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-r MediaRunner"

The following is an example report:

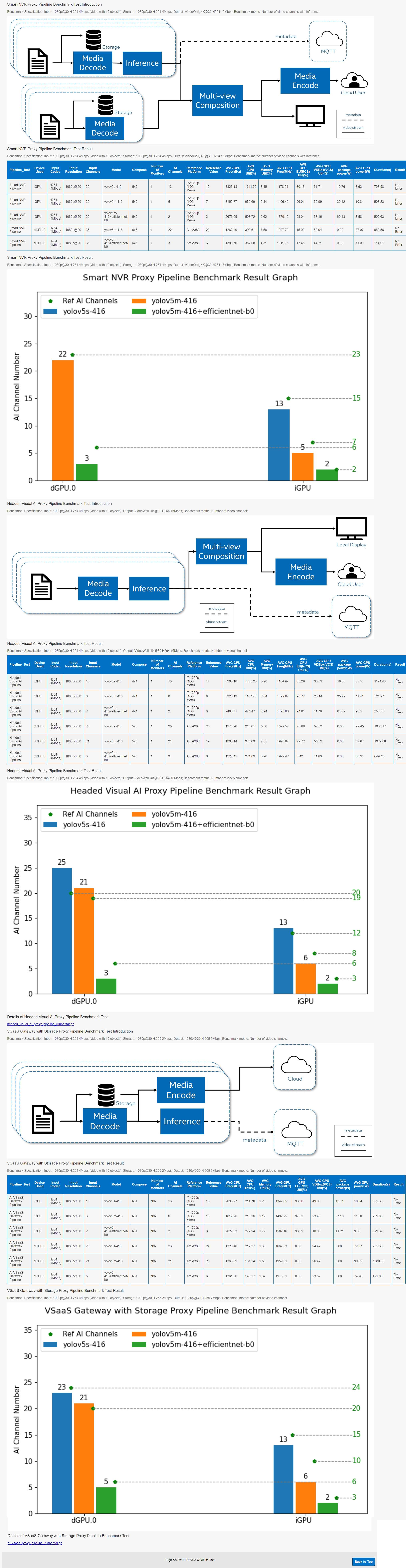

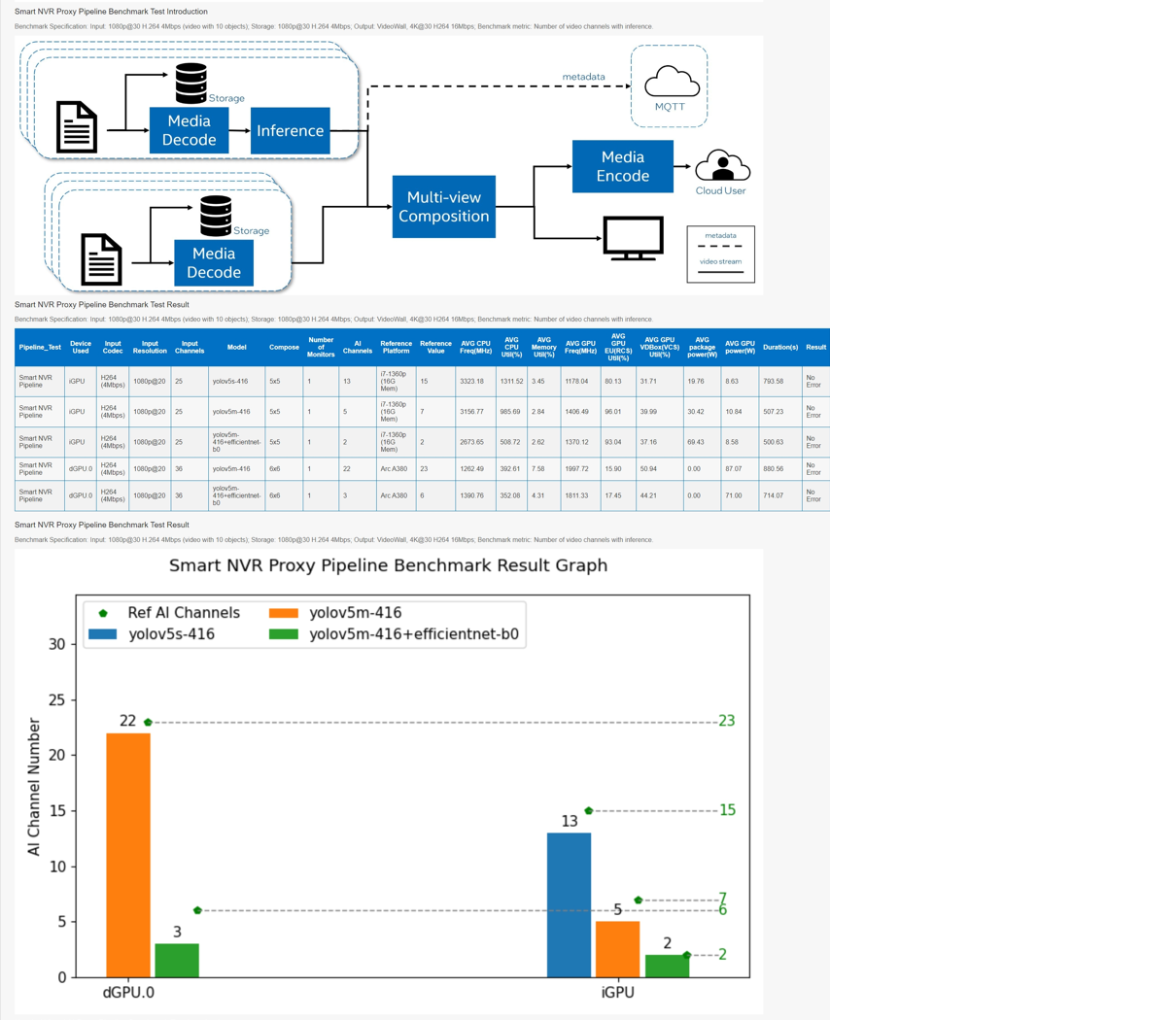

Run Smart NVR Proxy Pipeline Benchmark#

The Smart NVR pipeline benchmark consists of the following steps:

Decodes 25 1080p H264 video channels

Stores video stream in local storage

Runs AI Inference on a subset of the video channels

Composes decoded video channels into multi-view 4K video wall stream

Encodes the multi-view video wall stream for remote viewing

Displays the multi-view video wall stream on an attached monitor (a 4K monitor is preferred)

The following command runs the pipeline benchmark:

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-r SmartAIRunner"

The following is an example report:

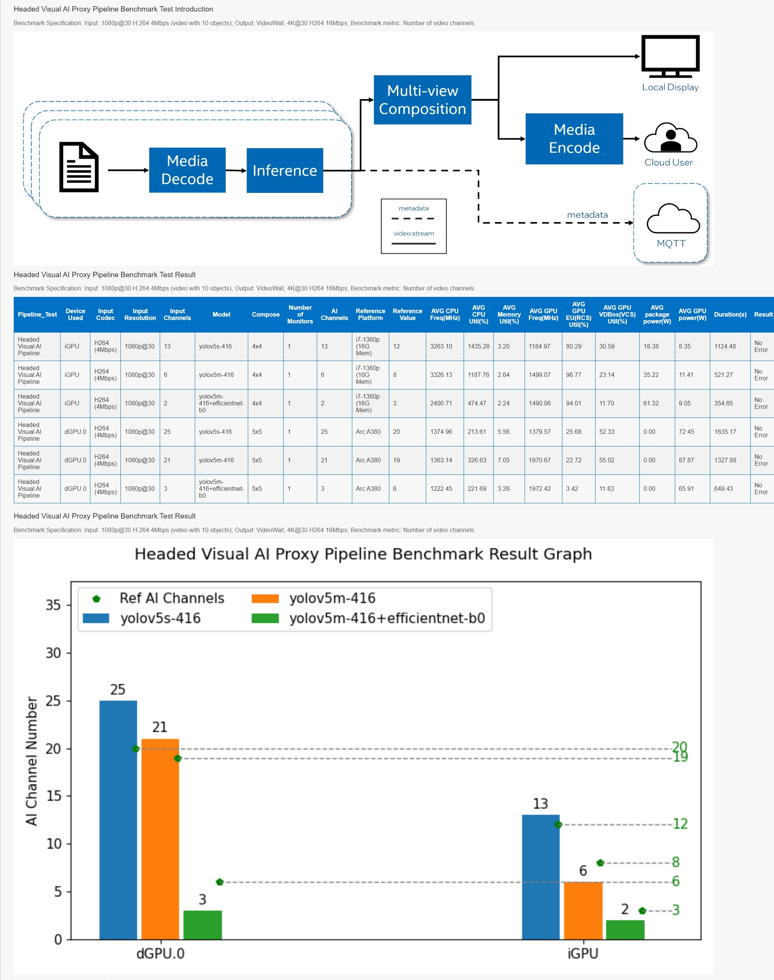

Run Headed Visual AI Proxy Pipeline Benchmark#

The Headed Visual AI Proxy Pipeline consists of the following steps:

Decodes a specified number of 1080p video channels

Runs AI Inference on all video channels

Composes all video channels into a multi-view video wall stream

Encodes the multi-view video wall stream for remote viewing

Displays the multi-view video wall stream on an attached monitor (a 4K monitor is preferred)

The following command runs the pipeline benchmark:

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-r VisualAIRunner"

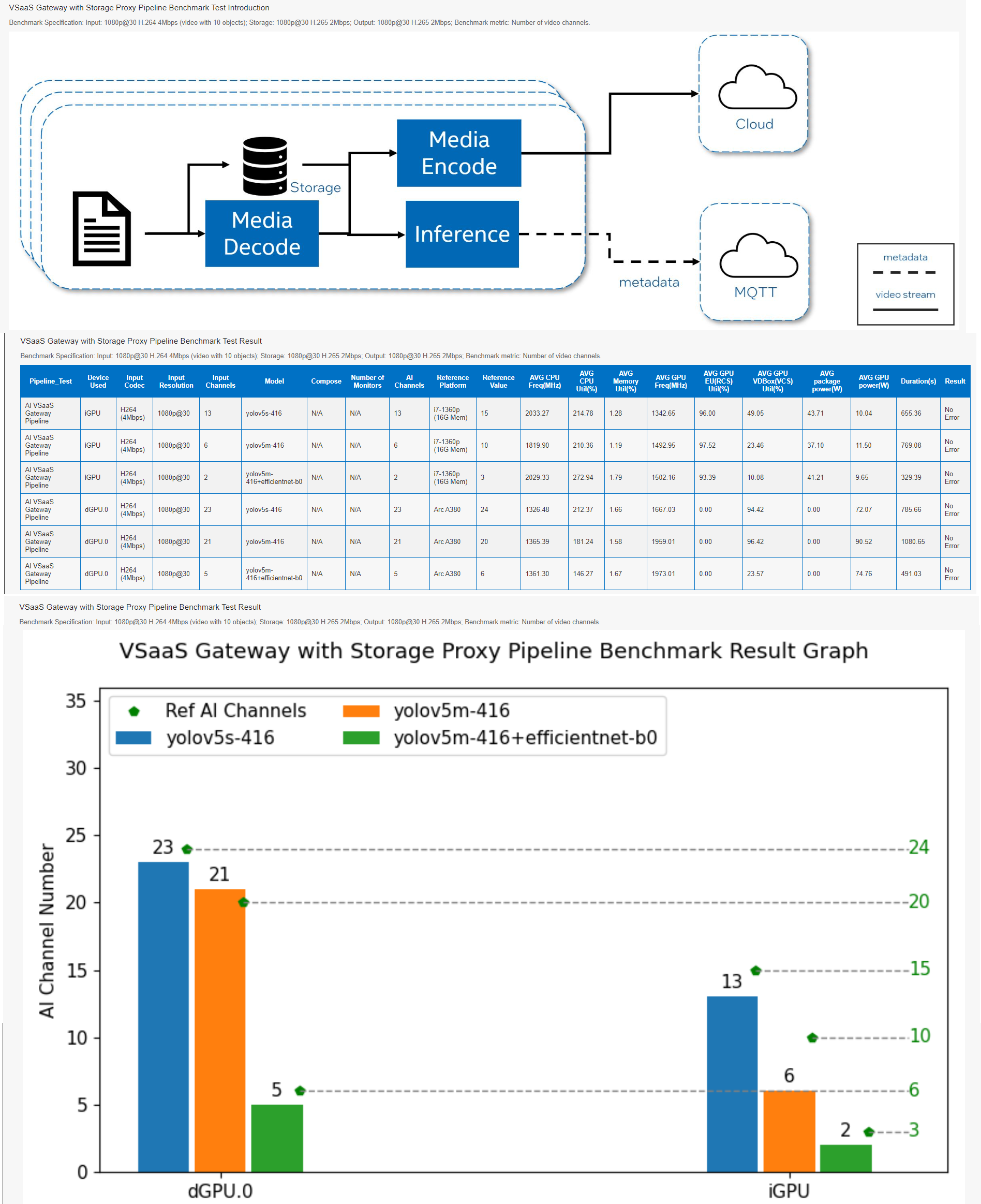

Run VSaaS Gateway with Storage and AI Proxy Pipeline Benchmark#

The VSaaS Gateway with Storage and AI Proxy Pipeline consists of the following steps:

Decodes a specified number of 1080p H264 video channels

Stores video stream in local storage

Runs AI inference on all video channels

Transcodes the video channels to H265 for remote consumption

The following command runs the pipeline benchmark:

cd Metro_AI_Suite_Device_Qualification/Metro_AI_Suite_Device_Qualification_for_Hardware_Builder_1.5

esdq --verbose module run metro-device-qualification --arg "-r VsaasAIRunner"
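The individual runners described in the sections above can also be run one after another from a single script, for example to repeat only a subset of the suite. The following is a sketch under assumptions: the function names runner_cmd and run_selected are hypothetical, only runner names that appear on this page are used, and it assumes that esdq returns a non-zero exit status when a runner fails.

```shell
# Runner names taken from this page; each is shown above with only the -r flag.
RUNNERS="MemBenchmark MediaRunner SmartAIRunner VisualAIRunner VsaasAIRunner"

# Hypothetical helper: print the esdq command line for one runner.
runner_cmd() {
    printf 'esdq --verbose module run metro-device-qualification --arg "-r %s"\n' "$1"
}

# Hypothetical helper: run each named runner in turn and stop at the first failure.
# Call it from the package directory used in the commands above.
run_selected() {
    for runner in "$@"; do
        echo "Running: $(runner_cmd "$runner")"
        esdq --verbose module run metro-device-qualification --arg "-r $runner" || return 1
    done
}

# Preview the commands without executing any benchmark.
for runner in $RUNNERS; do
    runner_cmd "$runner"
done

# Example: run only the memory and media benchmarks.
# run_selected MemBenchmark MediaRunner
```

To run every test and produce the combined report in one step, the "-r all" form shown under Run Full ESDQ remains the simpler choice.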

Sample Full ESDQ Report#