Advanced User Guide

1. Introduction

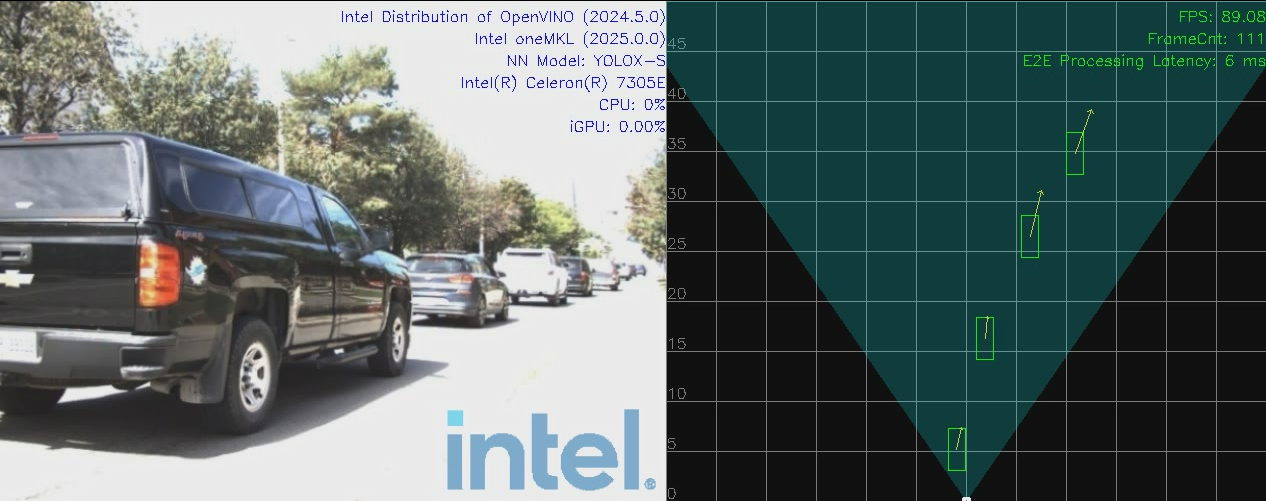

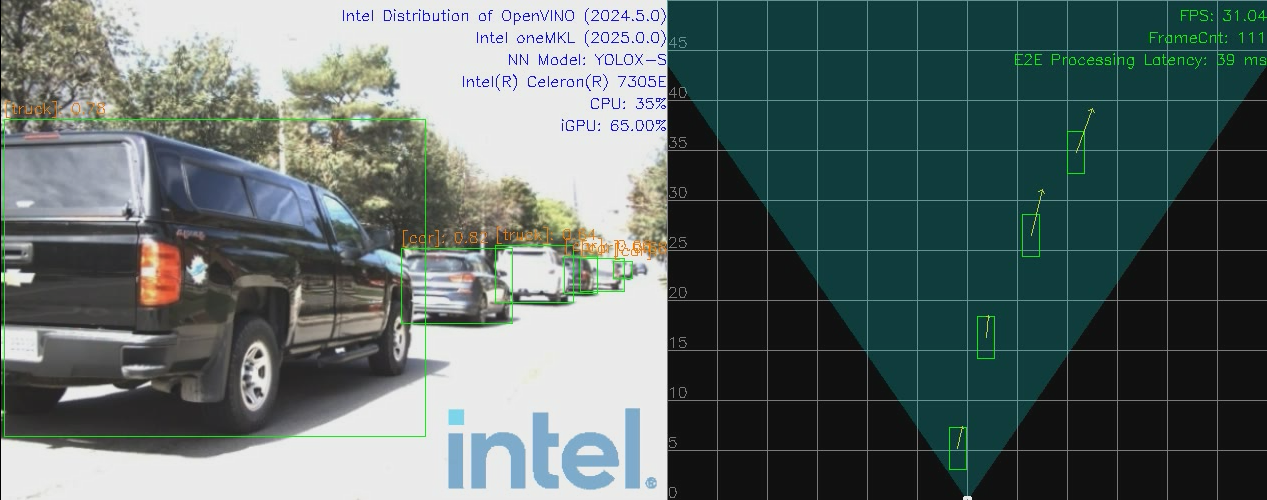

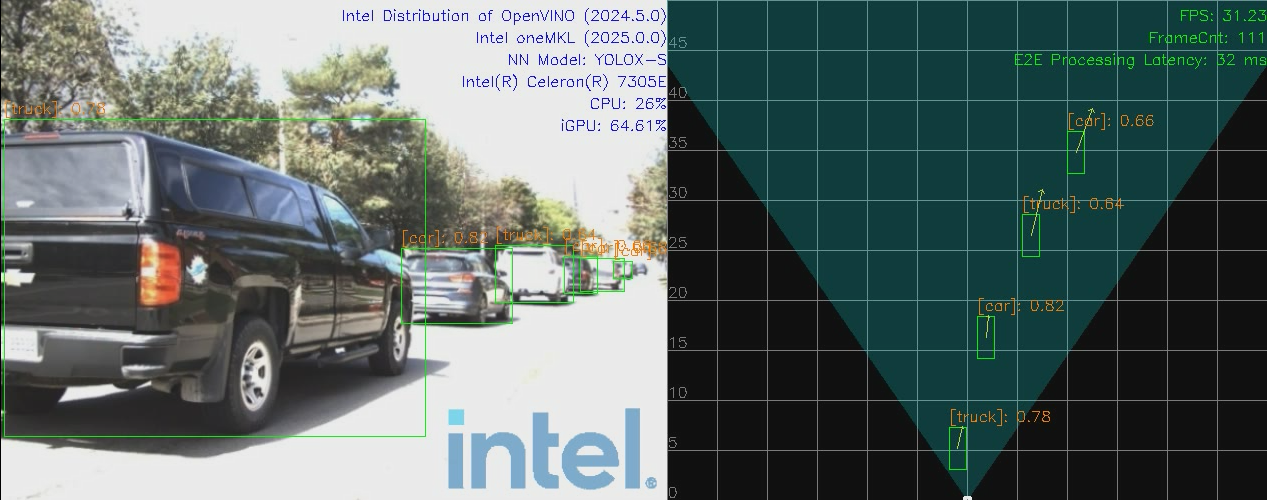

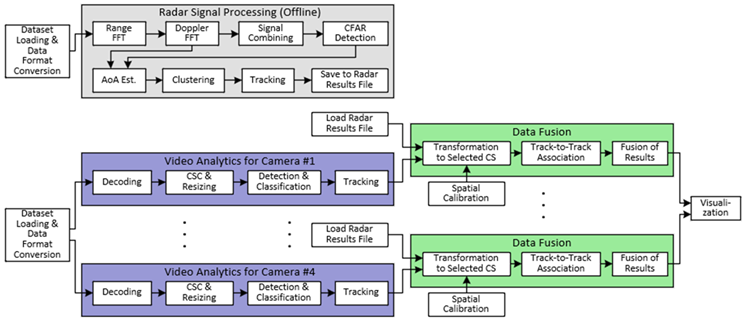

This document presents an Intel® software reference implementation (hereinafter abbreviated as SW RI) of Metro AI Suite Sensor Fusion for Traffic Management, which integrates sensor fusion of camera and mmWave radar (a.k.a. ISF “C+R” or AIO “C+R”). It also describes the detailed steps for running this SW RI on the NEPRA base platform.

The internal project code name is “Garnet Park”.

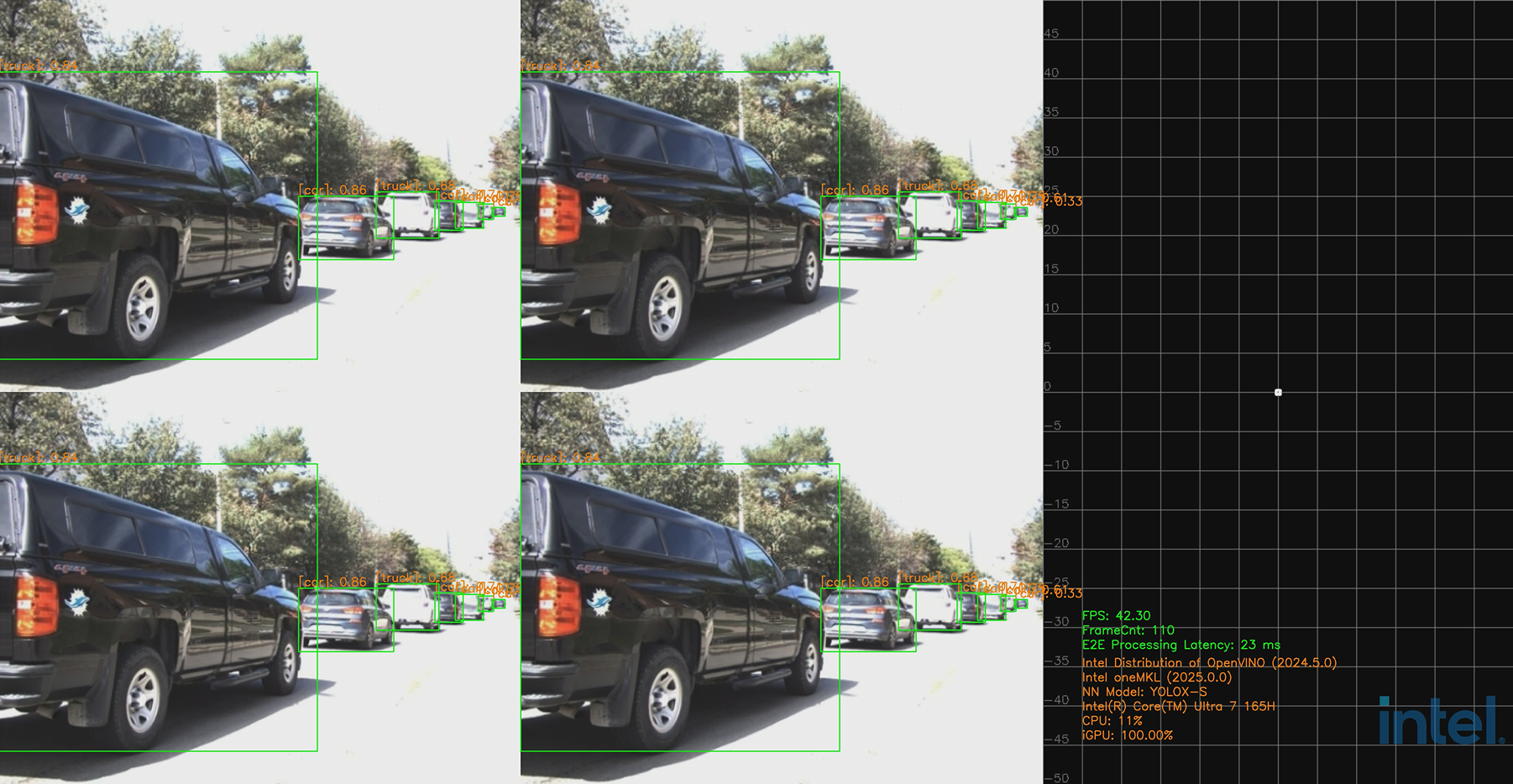

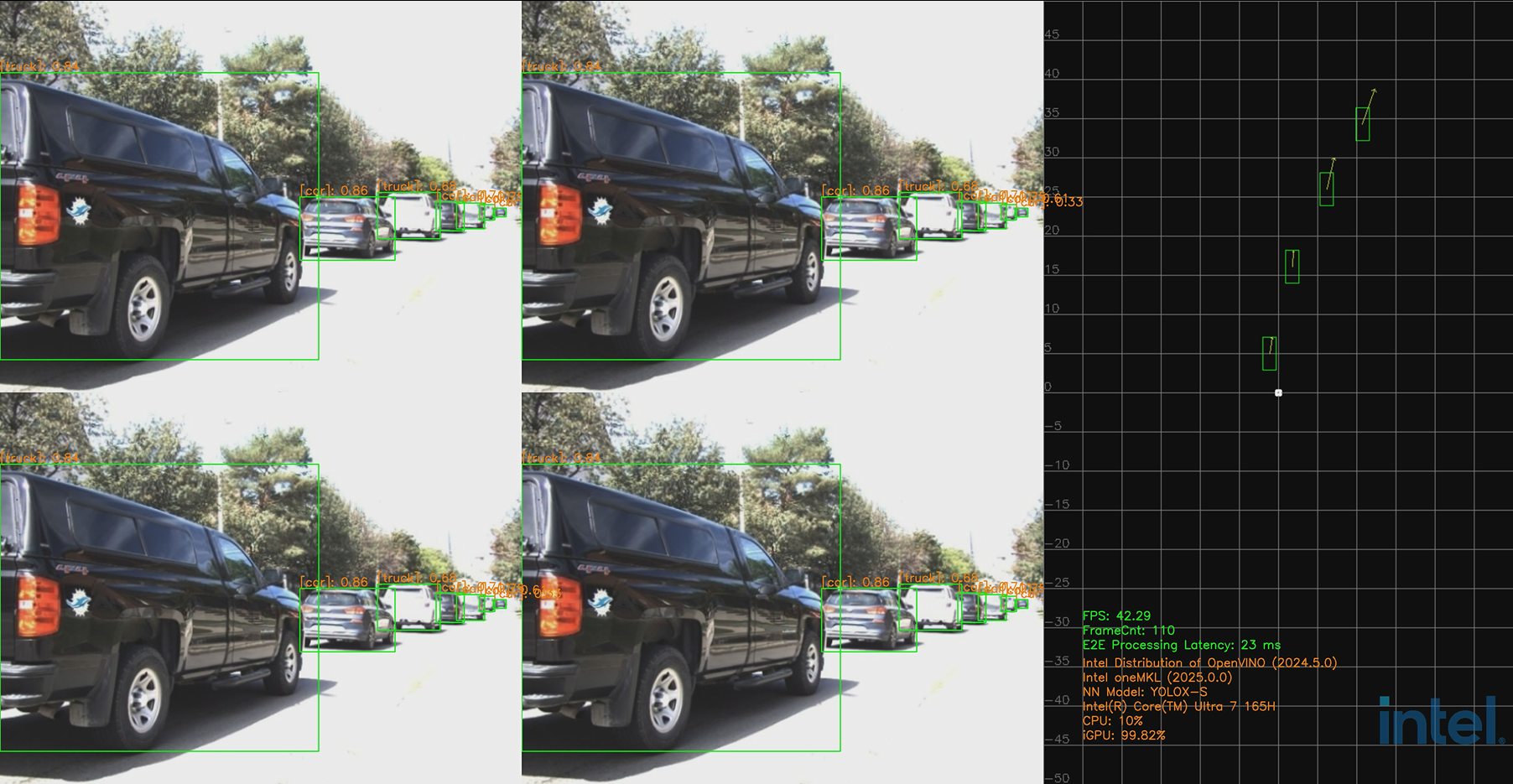

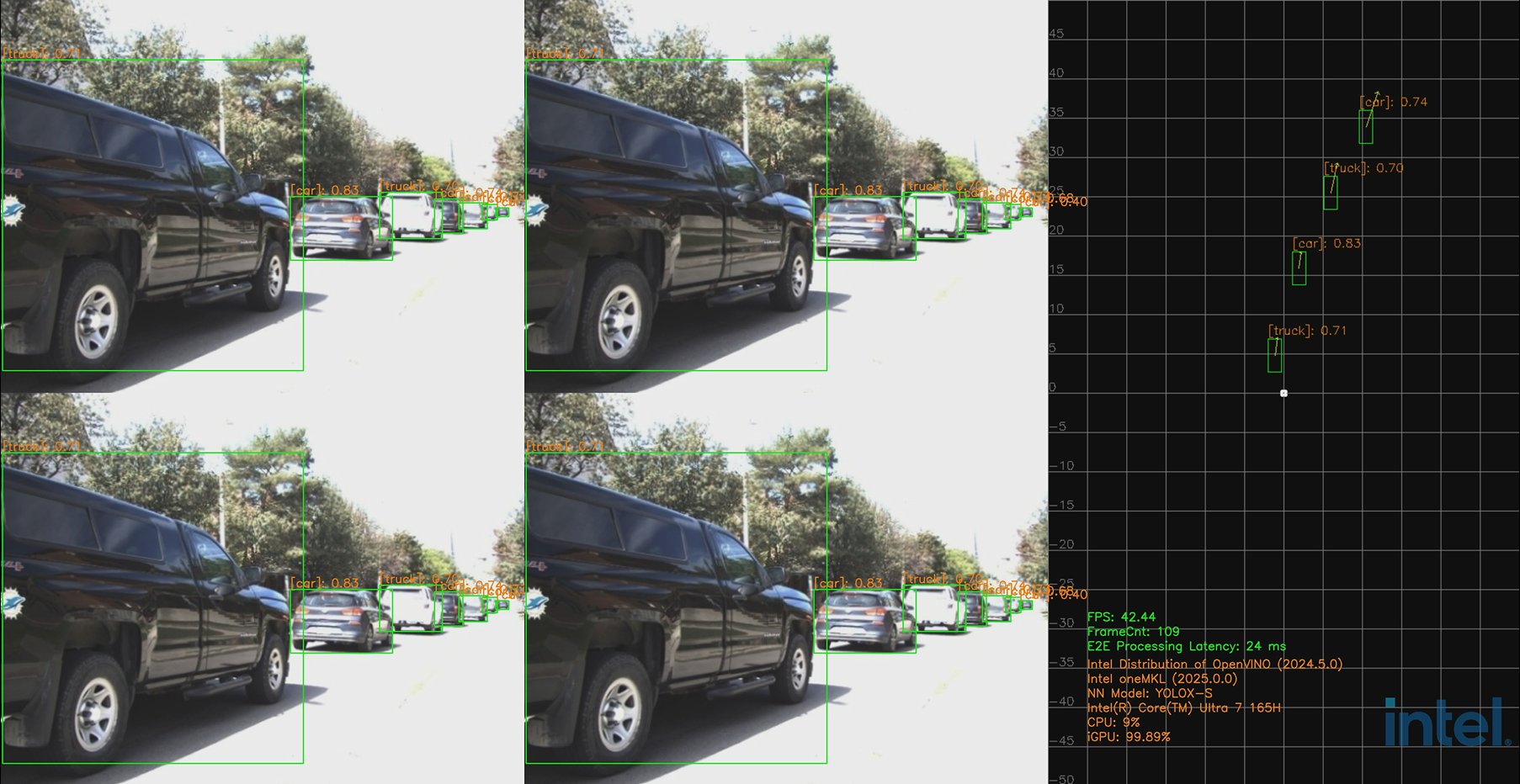

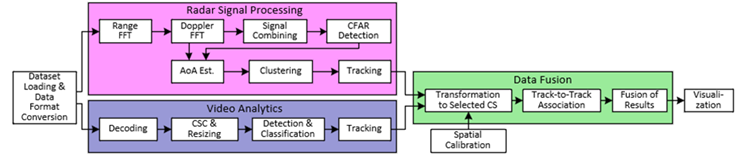

As shown in Fig.1, the E2E pipeline of this SW RI includes the following major blocks (workloads):

Dataset loading and data format conversion

Radar signal processing

Video analytics

Data fusion

Visualization

All of the above workloads can run on a single Intel SoC processor, which provides all the required heterogeneous computing capabilities. To maximize performance on Intel processors, we optimized this SW RI using Intel SW tool kits in addition to open-source SW libraries.

1.1 Prerequisites

Intel® Distribution of OpenVINO™ Toolkit

Version: 2024.5

RADDet dataset

Platform

Intel® Celeron® Processor 7305E (1C+1R use case)

Intel® Core™ Ultra 7 Processor 165H (4C+4R use case)

1.2 Modules

AI Inference Service:

Media Processing (Camera)

Radar Processing (mmWave Radar)

Sensor Fusion

Demo Application

1.2.1 AI Inference Service

AI Inference Service is based on the HVA pipeline framework. In this SW RI, it includes the functions of DL inference, radar signal processing, and data fusion.

AI Inference Service exposes both RESTful API and gRPC API to clients, so that a pipeline defined and requested by a client can be run within this service.

RESTful API: listens to port 50051

gRPC API: listens to port 50052

vim $PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config

...

[HTTP]

address=0.0.0.0

RESTfulPort=50051

gRPCPort=50052
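To confirm which ports the service will use before starting it, the `[HTTP]` section can be read programmatically. The following is a minimal sketch, assuming the file consists of simple `[Section]` headers and `key=value` lines as in the excerpt above; the helper name `read_config_values` is hypothetical and not part of the SW RI, and treating `#` lines as comments is also an assumption.

```python
def read_config_values(text):
    """Parse simple `[Section]` / `key=value` lines into {section: {key: value}}.

    Assumption: the config uses only these two line shapes. Lines outside any
    section (such as an elided "..." line) are ignored.
    """
    sections = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):  # assumption: '#' starts a comment
            continue
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            sections.setdefault(current, {})
        elif "=" in line and current is not None:
            key, value = line.split("=", 1)
            sections[current][key.strip()] = value.strip()
    return sections


# Sample text copied from the excerpt above.
sample = "[HTTP]\naddress=0.0.0.0\nRESTfulPort=50051\ngRPCPort=50052\n"
http = read_config_values(sample)["HTTP"]
print("RESTful port:", http["RESTfulPort"], "gRPC port:", http["gRPCPort"])
```

To use it against the real file, read `$PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config` into a string and pass that string to the helper.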

1.2.2 Demo Application

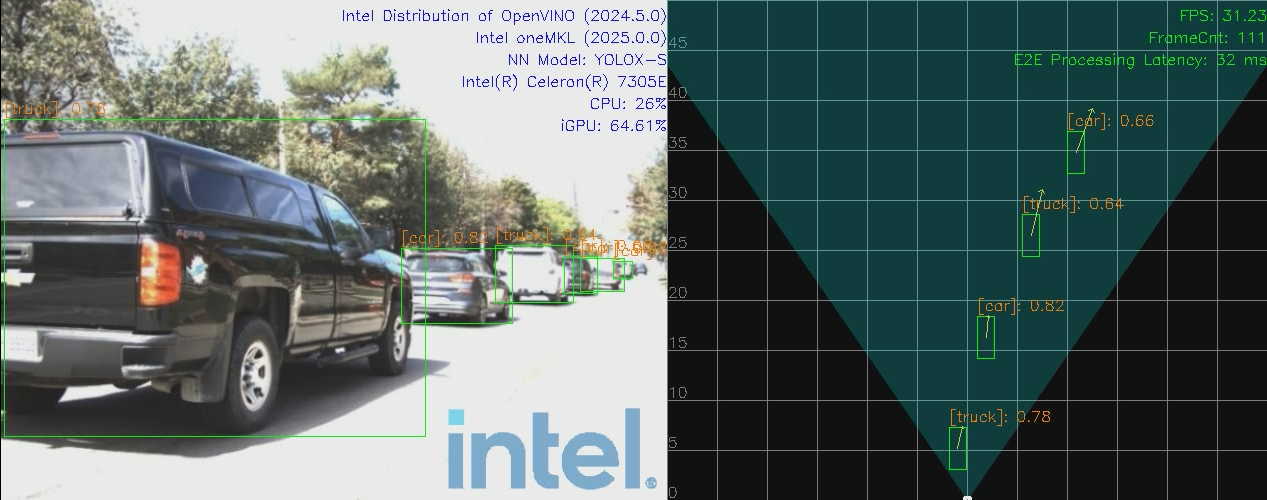

Currently we support four display types: media, radar, media_radar, media_fusion.

2. Prerequisites

Perform a fresh installation of Ubuntu* Desktop 24.04 on the target system.

Configure your proxy:

export http_proxy=<Your-Proxy>

export https_proxy=<Your-Proxy>

3. Install the Package and Build the Project

3.1 Install the package

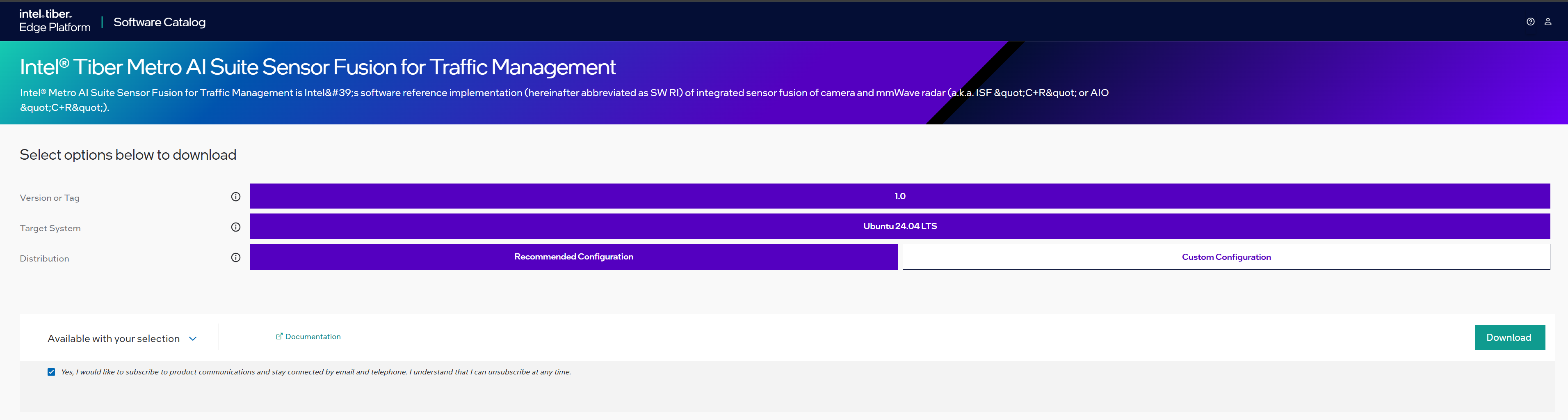

Select Download to download the Intel® Metro AI Suite Sensor Fusion for Traffic Management package.

Figure 2. Download Page

Click Download. In the next screen, accept the license agreement and copy the Product Key.

Transfer the downloaded package to the target Ubuntu* system and unzip:

unzip Metro-AI-Suite-Sensor-Fusion-for-Traffic-Management.zip

Note: Please use the same credentials that were created during Ubuntu installation to proceed with the installation of Intel® Metro AI Suite Sensor Fusion for Traffic Management.

Go to the Metro-AI-Suite-Sensor-Fusion-for-Traffic-Management/ directory:

cd Metro-AI-Suite-Sensor-Fusion-for-Traffic-Management

Prepare the Python venv environment:

sudo apt-get install python3.12-venv

python3 -m venv venv

source venv/bin/activate

Change the permission of the executable edgesoftware file:

chmod 755 edgesoftware

Install the Intel® Tiber Metro AI Suite Sensor Fusion for Traffic Management package:

./edgesoftware install

When prompted, enter the Product Key. You can enter the Product Key mentioned in the email from Intel confirming your download (or the Product Key you copied in Step 2).

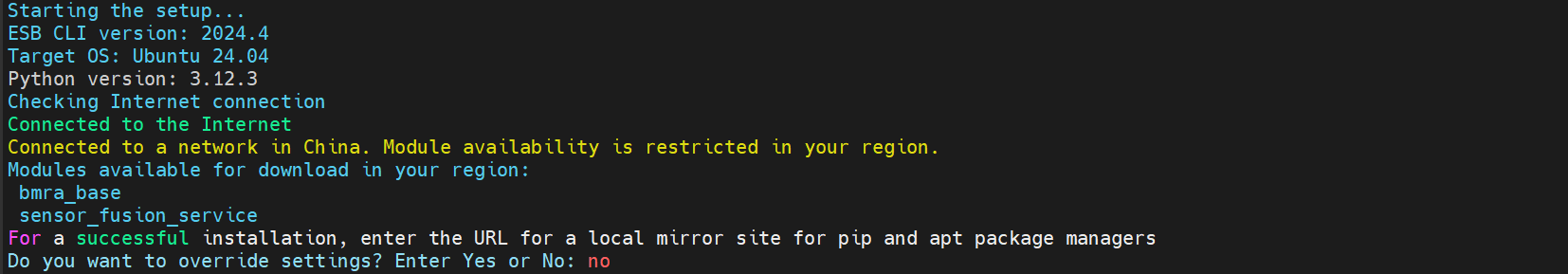

Note for People’s Republic of China (PRC) Network:

If you are connecting from the PRC network, the following prompt will appear during bmra base installation:

Figure 3. Prompt to Enable PRC Network Mirror

Type No to use the default settings, or Yes to enter the local mirror URL for pip and apt package managers.

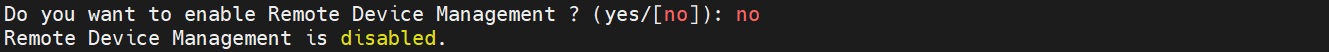

When prompted for the Remote Device Management feature, type no

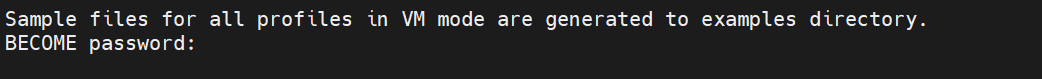

Figure 4. Prompt to Enable RDM

When prompted for the BECOME password, enter your Linux* account password.

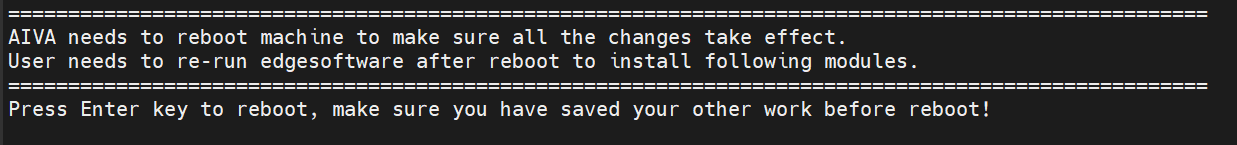

Figure 5. Prompt for BECOME Password

When prompted to reboot the machine, press Enter. Be sure to save your work before rebooting.

Figure 6. Prompt to Reboot

After rebooting, resume the installation:

cd Metro-AI-Suite-Sensor-Fusion-for-Traffic-Management

./edgesoftware install

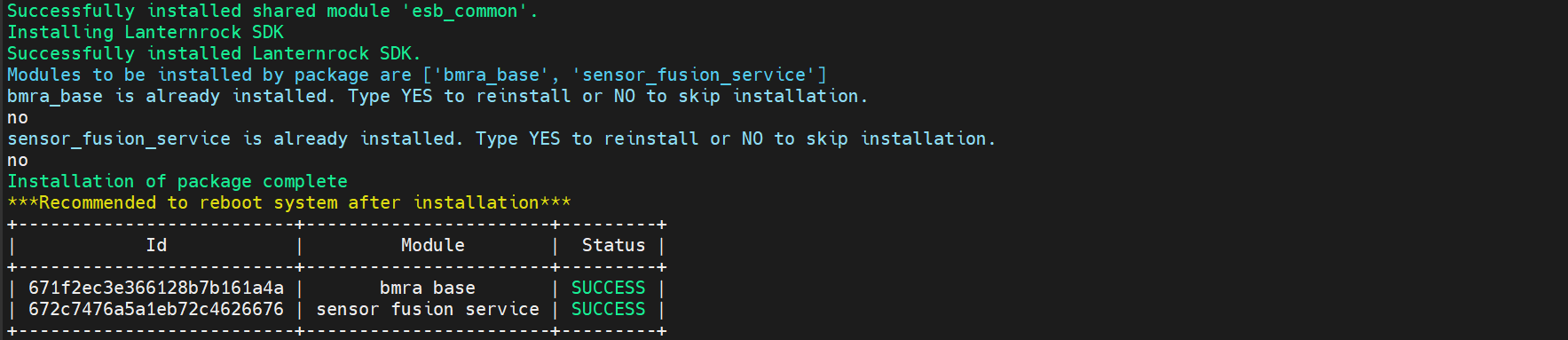

When the installation is complete, you will see the message “Installation of package complete” and the installation status for each module.

Figure 7. Installation Complete Message

3.2 Install Dependencies and Build Project

Install 3rd party libs

cd Metro_AI_Suite_Sensor_Fusion_for_Traffic_Management_metro-1.0/sensor_fusion_service

bash install_3rd.sh

set $PROJ_DIR

cd Metro_AI_Suite_Sensor_Fusion_for_Traffic_Management_metro/sensor_fusion_service

export PROJ_DIR=$PWD

prepare global radar configs in folder: /opt/datasets

sudo ln -s $PROJ_DIR/ai_inference/deployment/datasets /opt/datasets

prepare models in folder: /opt/models

sudo ln -s $PROJ_DIR/ai_inference/deployment/models /opt/models

prepare offline radar results for 4C4R:

sudo mv $PROJ_DIR/ai_inference/deployment/datasets/radarResults.csv /opt

build project

bash -x build.sh
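Before running the service, it can be useful to confirm that the symbolic links created above point where expected. The following is a minimal sketch of such a check; the helper name `link_points_to` is hypothetical and is not shipped with the package.

```python
import os


def link_points_to(link_path, expected_target):
    """Return True if link_path is a symlink that resolves to expected_target."""
    return (
        os.path.islink(link_path)
        and os.path.realpath(link_path) == os.path.realpath(expected_target)
    )


# Example usage (paths taken from the steps above; PROJ_DIR must be exported):
#   proj_dir = os.environ["PROJ_DIR"]
#   link_points_to("/opt/datasets",
#                  os.path.join(proj_dir, "ai_inference/deployment/datasets"))
#   link_points_to("/opt/models",
#                  os.path.join(proj_dir, "ai_inference/deployment/models"))
```

A result of False for either link suggests that the corresponding `ln -s` step was skipped or was run with a different `$PROJ_DIR`.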

4. Download and Convert Dataset

To obtain the RADDet dataset, refer to the How To Get RADDET Dataset section of this guide.

Upon success, the bin files will be extracted and saved to $DATASET_ROOT/bin_files_{VERSION}.

NOTE: The latest converted dataset version should be v1.0.

5. Run Sensor Fusion Application

This section describes how to run the Intel® Metro AI Suite Sensor Fusion for Traffic Management application.

The application supports different pipelines, each described by a topology JSON file. The defined pipeline topologies are listed in sec 5.1 Resources Summary.

Two steps are required to run the sensor fusion application:

Start the AI Inference service; more details can be found in sec 5.2 Start Service.

Run the application entry program; more details can be found in sec 5.3 Run Entry Program.

In addition, each component can be tested without display by following the guides in sec 5.3.2 1C+1R Unit Tests and sec 5.3.4 4C+4R Unit Tests.

5.1 Resources Summary

Local File Pipeline for Media pipeline

Json File: localMediaPipeline.json

File location: ai_inference/test/configs/raddet/1C1R/localMediaPipeline.json

Pipeline Description:

input -> decode -> detection -> tracking -> output

Local File Pipeline for mmWave Radar pipeline

Json File: localRadarPipeline.json

File location: ai_inference/test/configs/raddet/1C1R/localRadarPipeline.json

Pipeline Description:

input -> preprocess -> radar_detection -> clustering -> tracking -> output

Local File Pipeline for Camera + Radar (1C+1R) Sensor fusion pipeline

Json File: localFusionPipeline.json

File location: ai_inference/test/configs/raddet/1C1R/localFusionPipeline.json

Pipeline Description:

input | -> decode -> detector -> tracker ->                           |
      | -> preprocess -> radar_detection -> clustering -> tracking -> | -> coordinate_transform->fusion -> output

Local File Pipeline for Camera + Radar (4C+4R) Sensor fusion pipeline

Json File: localFusionPipeline.json

File location: ai_inference/test/configs/raddet/4C4R/localFusionPipeline.json

Pipeline Description:

input | -> decode -> detector -> tracker -> |
      | -> radarOfflineResults ->           | -> coordinate_transform->fusion -> |
input | -> decode -> detector -> tracker -> |                                    |
      | -> radarOfflineResults ->           | -> coordinate_transform->fusion -> | -> output
input | -> decode -> detector -> tracker -> |                                    |
      | -> radarOfflineResults ->           | -> coordinate_transform->fusion -> |
input | -> decode -> detector -> tracker -> |                                    |
      | -> radarOfflineResults ->           | -> coordinate_transform->fusion -> |

5.2 Start Service

Open a terminal, run the following commands:

cd $PROJ_DIR

sudo bash -x run_service_bare.sh

# Output logs:

[2023-06-26 14:34:42.970] [DualSinks] [info] MaxConcurrentWorkload sets to 1

[2023-06-26 14:34:42.970] [DualSinks] [info] MaxPipelineLifeTime sets to 300s

[2023-06-26 14:34:42.970] [DualSinks] [info] Pipeline Manager pool size sets to 1

[2023-06-26 14:34:42.970] [DualSinks] [trace] [HTTP]: uv loop inited

[2023-06-26 14:34:42.970] [DualSinks] [trace] [HTTP]: Init completed

[2023-06-26 14:34:42.971] [DualSinks] [trace] [HTTP]: http server at 0.0.0.0:50051

[2023-06-26 14:34:42.971] [DualSinks] [trace] [HTTP]: running starts

[2023-06-26 14:34:42.971] [DualSinks] [info] Server set to listen on 0.0.0.0:50052

[2023-06-26 14:34:42.972] [DualSinks] [info] Server starts 1 listener. Listening starts

[2023-06-26 14:34:42.972] [DualSinks] [trace] Connection handle with uid 0 created

[2023-06-26 14:34:42.972] [DualSinks] [trace] Add connection with uid 0 into the conn pool

NOTE-1: The maximum concurrent workload (default: 1) can be configured in the file:

$PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config

...

[Pipeline]

maxConcurrentWorkload=1

NOTE-2: To stop the service, run the following command:

sudo pkill Hce
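Because the entry programs connect to the service over gRPC, a script that starts the service and then launches a client may want to wait until the port accepts connections. The following is a minimal sketch of such a readiness check using only a plain TCP connect; the helper name `wait_for_port` and its timeout values are assumptions, and a successful TCP connect does not by itself prove that the service is fully initialized.

```python
import socket
import time


def wait_for_port(host, port, timeout_s=30.0, interval_s=0.5):
    """Poll until a TCP connection to (host, port) succeeds or timeout_s elapses.

    Returns True once a connection is accepted, False on timeout.
    """
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval_s)


# Example usage, with the gRPC port from the log output above:
#   if not wait_for_port("127.0.0.1", 50052):
#       raise SystemExit("AI Inference service did not start listening in time")
```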

5.3 Run Entry Program

5.3.1 1C+1R

All executable files are located at: $PROJ_DIR/build/bin

Usage:

Usage: CRSensorFusionDisplay <host> <port> <json_file> <total_stream_num> <repeats> <data_path> <display_type> [<save_flag: 0 | 1>] [<pipeline_repeats>] [<fps_window: unsigned>] [<cross_stream_num>] [<warmup_flag: 0 | 1>] [<logo_flag: 0 | 1>]

--------------------------------------------------------------------------------

Environment requirement:

unset http_proxy;unset https_proxy;unset HTTP_PROXY;unset HTTPS_PROXY

host: use 127.0.0.1 to call from localhost.

port: configured as 50052; it can be changed by modifying the file $PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config before starting the service.

json_file: AI pipeline topology file.

total_stream_num: controls the number of input streams.

repeats: number of times to run the test; repeating gives more accurate performance figures.

data_path: folder containing the multi-sensor binary files used as input.

display_type: currently supports media, radar, media_radar, and media_fusion.

save_flag: whether to save the display results to a video file.

pipeline_repeats: number of pipeline repeats.

fps_window: the number of most recently processed frames used to calculate the fps; 0 means all processed frames are used.

cross_stream_num: the number of streams that run in a single pipeline.

warmup_flag: whether to warm up before the pipeline starts.

logo_flag: whether to add the Intel logo to the display.
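The fps_window parameter describes a sliding-window frame rate. The following is a minimal sketch of that idea, not the application's actual implementation: the class name `FpsMeter` is hypothetical, and the choice to divide the number of frame intervals by the elapsed time between the oldest and newest retained timestamps is an assumption.

```python
from collections import deque


class FpsMeter:
    """Sliding-window FPS estimate over the most recent frame timestamps."""

    def __init__(self, window=0):
        # window == 0 keeps every timestamp (all frames are used);
        # otherwise only the last `window` timestamps are retained.
        self._stamps = deque(maxlen=window if window > 0 else None)

    def tick(self, timestamp_s):
        """Record the completion time (in seconds) of one processed frame."""
        self._stamps.append(timestamp_s)

    def fps(self):
        """Frame intervals in the window divided by the time they span."""
        if len(self._stamps) < 2:
            return 0.0
        elapsed = self._stamps[-1] - self._stamps[0]
        return (len(self._stamps) - 1) / elapsed if elapsed > 0 else 0.0


# Frames that start slow (1 s apart) and then speed up (0.5 s apart).
windowed, overall = FpsMeter(window=3), FpsMeter(window=0)
for t in (0.0, 1.0, 2.0, 2.5, 3.0):
    windowed.tick(t)
    overall.tick(t)
print(windowed.fps(), overall.fps())
```

A small window tracks the current rate and reacts quickly to changes, while a window of 0 reports the average over the whole run.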

More specifically, open another terminal, run the following commands:

# multi-sensor inputs test-case

sudo -E ./build/bin/CRSensorFusionDisplay 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localFusionPipeline_libradar.json 1 1 /path-to-dataset media_fusion

Note: Run as root if you want to get GPU utilization profiling.
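When the entry program is launched from a script, the positional arguments and the proxy-free environment described above can be assembled in one place. The following is a minimal sketch under stated assumptions: the helper names `build_display_command` and `env_without_proxies` are hypothetical, only the required positional arguments are covered, and the `sudo -E` prefix used in the command above is left to the caller.

```python
import os

DISPLAY_TYPES = ("media", "radar", "media_radar", "media_fusion")
PROXY_VARS = ("http_proxy", "https_proxy", "HTTP_PROXY", "HTTPS_PROXY")


def build_display_command(json_file, data_path, display_type,
                          host="127.0.0.1", port=50052,
                          total_stream_num=1, repeats=1,
                          binary="./build/bin/CRSensorFusionDisplay"):
    """Return the argv list for the required positional arguments, in usage order."""
    if display_type not in DISPLAY_TYPES:
        raise ValueError(f"display_type must be one of {DISPLAY_TYPES}")
    return [binary, host, str(port), json_file,
            str(total_stream_num), str(repeats), data_path, display_type]


def env_without_proxies(env=None):
    """Copy an environment mapping with the four proxy variables removed."""
    cleaned = dict(os.environ if env is None else env)
    for name in PROXY_VARS:
        cleaned.pop(name, None)
    return cleaned


cmd = build_display_command(
    "ai_inference/test/configs/raddet/1C1R/libradar/localFusionPipeline_libradar.json",
    "/path-to-dataset", "media_fusion")
print(" ".join(cmd))
```

The resulting list and environment can be passed to `subprocess.run(cmd, env=env_without_proxies(), cwd=...)` with the working directory set to `$PROJ_DIR`.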

5.3.2 1C+1R Unit Tests

In this section, the unit tests of four major components will be described: media processing, radar processing, fusion pipeline without display and other tools for intermediate results.

Usage:

Usage: testGRPCLocalPipeline <host> <port> <json_file> <total_stream_num> <repeats> <data_path> <media_type> [<pipeline_repeats>] [<cross_stream_num>] [<warmup_flag: 0 | 1>]

--------------------------------------------------------------------------------

Environment requirement:

unset http_proxy;unset https_proxy;unset HTTP_PROXY;unset HTTPS_PROXY

host: use 127.0.0.1 to call from localhost.

port: configured as 50052; it can be changed by modifying the file $PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config before starting the service.

json_file: AI pipeline topology file.

total_stream_num: controls the number of input video streams.

repeats: number of times to run the test; repeating gives more accurate performance figures.

data_path: input data; use an absolute path, otherwise an error may occur.

media_type: currently supports image, video, and multisensor.

pipeline_repeats: number of pipeline repeats.

cross_stream_num: the number of streams that run in a single pipeline.

5.3.2.1 Unit Test: Media Processing

Open another terminal, run the following commands:

# media test-case

./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/localMediaPipeline.json 1 1 /path-to-dataset multisensor

5.3.2.2 Unit Test: Radar Processing

Open another terminal, run the following commands:

# radar test-case

./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_libradar.json 1 1 /path-to-dataset multisensor

5.3.2.3 Unit Test: Fusion pipeline without display

Open another terminal, run the following commands:

# fusion test-case

./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localFusionPipeline_libradar.json 1 1 /path-to-dataset multisensor

5.3.2.4 GPU VPLDecode test

./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/gpuLocalVPLDecodeImagePipeline.json 1 1000 $PROJ_DIR/ai_inference/test/demo/images image

5.3.2.5 Media model inference visualization

./build/bin/MediaDisplay 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/localMediaPipeline.json 1 1 /path-to-dataset multisensor

5.3.2.6 Radar pipeline with radar pcl as output

./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_pcl_libradar.json 1 1 /path-to-dataset multisensor

5.3.2.7 Save radar pipeline tracking results

./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_saveResult_libradar.json 1 1 /path-to-dataset multisensor

5.3.2.8 Save radar pipeline pcl results

./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_savepcl_libradar.json 1 1 /path-to-dataset multisensor

5.3.2.9 Save radar pipeline clustering results

./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_saveClustering_libradar.json 1 1 /path-to-dataset multisensor

5.3.2.10 Test radar pipeline performance

## no need to run the service

export HVA_NODE_DIR=$PWD/build/lib

source /opt/intel/openvino_2024/setupvars.sh

source /opt/intel/oneapi/setvars.sh

./build/bin/testRadarPerformance ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_libradar.json /path-to-dataset 1

5.3.2.11 Radar pcl results visualization

./build/bin/CRSensorFusionRadarDisplay 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_savepcl_libradar.json 1 1 /path-to-dataset pcl

5.3.2.12 Radar clustering results visualization

./build/bin/CRSensorFusionRadarDisplay 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_saveClustering_libradar.json 1 1 /path-to-dataset clustering

5.3.2.13 Radar tracking results visualization

./build/bin/CRSensorFusionRadarDisplay 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localRadarPipeline_libradar.json 1 1 /path-to-dataset tracking

5.3.3 4C+4R

All executable files are located at: $PROJ_DIR/build/bin

Usage:

Usage: CRSensorFusion4C4RDisplay <host> <port> <json_file> <additional_json_file> <total_stream_num> <repeats> <data_path> <display_type> [<save_flag: 0 | 1>] [<pipeline_repeats>] [<cross_stream_num>] [<warmup_flag: 0 | 1>] [<logo_flag: 0 | 1>]

--------------------------------------------------------------------------------

Environment requirement:

unset http_proxy;unset https_proxy;unset HTTP_PROXY;unset HTTPS_PROXY

host: use 127.0.0.1 to call from localhost.

port: configured as 50052; it can be changed by modifying the file $PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config before starting the service.

json_file: AI pipeline topology file.

additional_json_file: AI pipeline additional topology file.

total_stream_num: controls the number of input streams.

repeats: number of times to run the test; repeating gives more accurate performance figures.

data_path: folder containing the multi-sensor binary files used as input.

display_type: currently supports media, radar, media_radar, and media_fusion.

save_flag: whether to save the display results to a video file.

pipeline_repeats: number of pipeline repeats.

cross_stream_num: the number of streams that run in a single pipeline.

warmup_flag: whether to warm up before the pipeline starts.

logo_flag: whether to add the Intel logo to the display.

More specifically, open another terminal, run the following commands:

# multi-sensor inputs test-case

sudo -E ./build/bin/CRSensorFusion4C4RDisplay 127.0.0.1 50052 ai_inference/test/configs/raddet/4C4R/localFusionPipeline.json ai_inference/test/configs/raddet/4C4R/localFusionPipeline_npu.json 4 1 /path-to-dataset media_fusion

Note: Run as root if you want to get GPU utilization profiling.

To run 4C+4R with cross-stream support (for example, processing 3 streams on the GPU in one thread and the remaining stream on the NPU in another thread), run the following command, replacing save_flag with the desired value:

# multi-sensor inputs test-case

sudo -E ./build/bin/CRSensorFusion4C4RDisplayCrossStream 127.0.0.1 50052 ai_inference/test/configs/raddet/4C4R/cross-stream/localFusionPipeline.json ai_inference/test/configs/raddet/4C4R/cross-stream/localFusionPipeline_npu.json 4 1 /path-to-dataset media_fusion save_flag 1 3

If the command above fails with OpenCV errors because you are connected remotely, try the following command instead. It sets the save flag to 2, which saves the video locally without showing it on the screen:

# multi-sensor inputs test-case

sudo -E ./build/bin/CRSensorFusion4C4RDisplayCrossStream 127.0.0.1 50052 ai_inference/test/configs/raddet/4C4R/cross-stream/localFusionPipeline.json ai_inference/test/configs/raddet/4C4R/cross-stream/localFusionPipeline_npu.json 4 1 /path-to-dataset media_fusion 2 1 3
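The optional arguments are positional, so a later one (such as cross_stream_num) can only be given if every earlier optional argument is given too. The following is a minimal sketch of a command builder that enforces this ordering. The helper name `build_4c4r_command` is hypothetical, and the sketch assumes that the cross-stream binary accepts its optional arguments in the same order as the CRSensorFusion4C4RDisplay usage string above.

```python
# Order taken from the CRSensorFusion4C4RDisplay usage string.
OPTIONAL_ORDER = ("save_flag", "pipeline_repeats", "cross_stream_num",
                  "warmup_flag", "logo_flag")


def build_4c4r_command(binary, json_file, additional_json_file, data_path,
                       display_type, host="127.0.0.1", port=50052,
                       total_stream_num=4, repeats=1, **optional):
    """Return the argv list; optional arguments must form a gap-free prefix."""
    unknown = set(optional) - set(OPTIONAL_ORDER)
    if unknown:
        raise ValueError(f"unknown optional arguments: {sorted(unknown)}")
    supplied = [name in optional for name in OPTIONAL_ORDER]
    count = sum(supplied)
    # If the first `count` entries are not all supplied, an earlier one is missing.
    if supplied[:count] != [True] * count:
        raise ValueError("optional arguments are positional; fill earlier ones first")
    cmd = [binary, host, str(port), json_file, additional_json_file,
           str(total_stream_num), str(repeats), data_path, display_type]
    cmd += [str(optional[name]) for name in OPTIONAL_ORDER[:count]]
    return cmd


cmd = build_4c4r_command(
    "./build/bin/CRSensorFusion4C4RDisplayCrossStream",
    "ai_inference/test/configs/raddet/4C4R/cross-stream/localFusionPipeline.json",
    "ai_inference/test/configs/raddet/4C4R/cross-stream/localFusionPipeline_npu.json",
    "/path-to-dataset", "media_fusion",
    save_flag=2, pipeline_repeats=1, cross_stream_num=3)
print(" ".join(cmd))
```

With these inputs the printed arguments match the second cross-stream command above, without the `sudo -E` prefix.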

5.3.4 4C+4R Unit Tests

In this section, the unit tests of two major components will be described: fusion pipeline without display and media processing.

Usage:

Usage: testGRPC4C4RPipeline <host> <port> <json_file> <additional_json_file> <total_stream_num> <repeats> <data_path> [<cross_stream_num>] [<warmup_flag: 0 | 1>]

--------------------------------------------------------------------------------

Environment requirement:

unset http_proxy;unset https_proxy;unset HTTP_PROXY;unset HTTPS_PROXY

host: use 127.0.0.1 to call from localhost.

port: configured as 50052; it can be changed by modifying the file $PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config before starting the service.

json_file: AI pipeline topology file.

additional_json_file: AI pipeline additional topology file.

total_stream_num: controls the number of input video streams.

repeats: number of times to run the test; repeating gives more accurate performance figures.

data_path: input data; use an absolute path, otherwise an error may occur.

cross_stream_num: the number of streams that run in a single pipeline.

warmup_flag: whether to warm up before the pipeline starts.

Set offline radar CSV file path

First, set the offline radar CSV file path in both localFusionPipeline.json (file location: ai_inference/test/configs/raddet/4C4R/localFusionPipeline.json) and localFusionPipeline_npu.json (file location: ai_inference/test/configs/raddet/4C4R/localFusionPipeline_npu.json) with "Configure String": "RadarDataFilePath=(STRING)/opt/radarResults.csv", as shown below:

{

"Node Class Name": "RadarResultReadFileNode",

......

"Configure String": "......;RadarDataFilePath=(STRING)/opt/radarResults.csv"

},
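Editing the Configure String by hand in two files is easy to get wrong, so the change can also be scripted. The following is a minimal sketch that operates on the string only, assuming it is a semicolon-separated list of `key=(TYPE)value` entries as the snippet above suggests. The helper name `set_config_entry` and the sample key `Foo` are hypothetical, and the layout of the rest of the topology file is deliberately not assumed.

```python
def set_config_entry(configure_string, key, typed_value):
    """Set `key` to `typed_value` in a semicolon-separated Configure String.

    Existing entries for `key` are replaced; otherwise a new entry is appended.
    Empty segments (for example from a trailing ';') are dropped, which is an
    assumption about what the parser tolerates.
    """
    entries = [entry for entry in configure_string.split(";") if entry]
    replaced = False
    for index, entry in enumerate(entries):
        name, sep, _ = entry.partition("=")
        if sep and name == key:
            entries[index] = f"{key}={typed_value}"
            replaced = True
    if not replaced:
        entries.append(f"{key}={typed_value}")
    return ";".join(entries)


# A node fragment shaped like the snippet above; "Foo" is a placeholder entry.
node = {
    "Node Class Name": "RadarResultReadFileNode",
    "Configure String": "Foo=(INT)1;RadarDataFilePath=(STRING)/tmp/old.csv",
}
node["Configure String"] = set_config_entry(
    node["Configure String"], "RadarDataFilePath", "(STRING)/opt/radarResults.csv")
print(node["Configure String"])
```

Applying the same call to the matching node in both topology files keeps the two paths in sync.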

The method for generating offline radar files is described in 5.3.2.7 Save radar pipeline tracking results. Alternatively, you can use the pre-prepared data with the command below:

sudo mv $PROJ_DIR/ai_inference/deployment/datasets/radarResults.csv /opt

5.3.4.1 Unit Test: Fusion Pipeline without display

Open another terminal, run the following commands:

# fusion test-case

sudo -E ./build/bin/testGRPC4C4RPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/4C4R/localFusionPipeline.json ai_inference/test/configs/raddet/4C4R/localFusionPipeline_npu.json 4 1 /path-to-dataset

5.3.4.2 Unit Test: Fusion Pipeline with cross-stream without display

Open another terminal, run the following commands:

# fusion test-case

sudo -E ./build/bin/testGRPC4C4RPipelineCrossStream 127.0.0.1 50052 ai_inference/test/configs/raddet/4C4R/cross-stream/localFusionPipeline.json ai_inference/test/configs/raddet/4C4R/cross-stream/localFusionPipeline_npu.json 4 1 /path-to-dataset 1 3

5.3.4.3 Unit Test: Media Processing

Open another terminal, run the following commands:

# media test-case

sudo -E ./build/bin/testGRPC4C4RPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/4C4R/localMediaPipeline.json ai_inference/test/configs/raddet/4C4R/localMediaPipeline_npu.json 4 1 /path-to-dataset

# cpu detection test-case

sudo -E ./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/UTCPUDetection-yoloxs.json 1 1 /path-to-dataset multisensor

# gpu detection test-case

sudo -E ./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/UTGPUDetection-yoloxs.json 1 1 /path-to-dataset multisensor

# npu detection test-case

sudo -E ./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/UTNPUDetection-yoloxs.json 1 1 /path-to-dataset multisensor

5.4 KPI test

5.4.1 1C+1R

# Run service with the following command:

sudo bash run_service_bare_log.sh

# Open another terminal, run the command below:

sudo -E ./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localFusionPipeline_libradar.json 1 10 /path-to-dataset multisensor

Fps and average latency will be calculated.

5.4.2 4C+4R

# Run service with the following command:

sudo bash run_service_bare_log.sh

# Open another terminal, run the command below:

sudo -E ./build/bin/testGRPC4C4RPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/4C4R/localFusionPipeline.json ai_inference/test/configs/raddet/4C4R/localFusionPipeline_npu.json 4 10 /path-to-dataset

Fps and average latency will be calculated.

5.5 Stability test

5.5.1 1C+1R stability test

NOTE: Change the workload configuration to 1 in the file:

$PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config

...

[Pipeline]

maxConcurrentWorkload=1

Run the service first, then open another terminal and run the command below:

# 1C1R without display

sudo -E ./build/bin/testGRPCLocalPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/1C1R/libradar/localFusionPipeline_libradar.json 1 100 /path-to-dataset multisensor 100

5.5.2 4C+4R stability test

NOTE: Change the workload configuration to 4 in the file:

$PROJ_DIR/ai_inference/source/low_latency_server/AiInference.config

...

[Pipeline]

maxConcurrentWorkload=4
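Switching between the 1C+1R and 4C+4R stability tests means changing this value back and forth, which can be scripted. The following is a minimal sketch that rewrites the setting in the config text; the helper name `set_max_concurrent_workload` is hypothetical, and the sketch assumes the key appears on its own line with an integer value, as in the excerpt above.

```python
import re

# Matches a line of the form "maxConcurrentWorkload=<integer>".
_WORKLOAD_LINE = re.compile(
    r"^([ \t]*maxConcurrentWorkload[ \t]*=[ \t]*)\d+[ \t]*$", re.MULTILINE)


def set_max_concurrent_workload(config_text, value):
    """Return config_text with maxConcurrentWorkload set to `value`."""
    if int(value) < 1:
        raise ValueError("value must be a positive integer")
    new_text, count = _WORKLOAD_LINE.subn(
        lambda match: f"{match.group(1)}{int(value)}", config_text)
    if count == 0:
        raise ValueError("maxConcurrentWorkload entry not found")
    return new_text


sample = "[Pipeline]\nmaxConcurrentWorkload=1\n"
print(set_max_concurrent_workload(sample, 4))
```

The service reads the file at startup, so restart it after writing the updated text back to `AiInference.config`.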

Run the service first, then open another terminal and run the command below:

# 4C4R without display

sudo -E ./build/bin/testGRPC4C4RPipeline 127.0.0.1 50052 ai_inference/test/configs/raddet/4C4R/localFusionPipeline.json ai_inference/test/configs/raddet/4C4R/localFusionPipeline_npu.json 4 100 /path-to-dataset 100

6. Code Reference

Some of the code is referenced from the following projects:

IGT GPU Tools (MIT License)

Intel DL Streamer (MIT License)

Open Model Zoo (Apache-2.0 License)